In many practical applications, machine learning models must be trained on large amounts of data, much of which is generated by smart devices. Collecting all of this data on a central server for training places a heavy computational burden on the server and raises privacy concerns among device users. For this reason, federated learning has received considerable attention in recent years.

Professor Zaiyue Yang’s research group from the Department of Mechanical and Energy Engineering at the Southern University of Science and Technology (SUSTech) recently published a research paper on communication-efficient federated learning.

Their paper, entitled “Lazily Aggregated Quantized Gradient Innovation for Communication-Efficient Federated Learning,” was published in IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), a top IEEE journal in pattern recognition and machine learning.

Federated learning does not require collecting users’ raw data directly. Instead, each user computes some information (for example, a gradient) from their local data and uploads it to the central server, which uses it to train the model (Figure 1). However, as the number of smart devices grows, the central server must communicate with a large number of devices during training.

Because deep learning models, which often have 10⁹ to 10¹² parameters, are now widely used, the central server must exchange a large amount of data with the distributed devices in every round of communication. Consequently, in many practical applications of federated learning, the communication burden is heavy and the latency is long, which directly reduces learning efficiency. In short, current federated learning methods reduce the computational load on the central server and protect user privacy, but at the cost of a very high communication volume.
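To make the scale concrete, the back-of-the-envelope calculation below estimates the upload volume of a single training round. It is a minimal sketch under simple assumptions that do not come from the paper: every device uploads one full gradient per round, each entry is a 32-bit float, and the model size (10⁹ parameters) and device count (100) are hypothetical.

```python
def upload_bytes_per_round(num_params: int, num_devices: int, bits_per_value: int = 32) -> float:
    """Bytes sent to the server in one round if every device uploads a full
    gradient with num_params entries, each encoded with bits_per_value bits."""
    return num_params * num_devices * bits_per_value / 8


# Hypothetical example: a 10**9-parameter model, 100 devices, 32-bit floats.
per_round = upload_bytes_per_round(10**9, 100, bits_per_value=32)
print(f"{per_round / 1e9:.0f} GB per round")  # prints "400 GB per round"
```

The two techniques the study uses attack this product directly: quantization shrinks `bits_per_value`, and skipping uploads reduces how many devices actually send data in a given round.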

Figure 1. Diagram of the federated learning architecture.

The main goal of the study was to reduce the amount of communication in federated learning. Starting from the basic gradient descent algorithm, the researchers use two techniques to reduce traffic: first, quantizing the gradient information; and second, designing an adaptive communication mechanism.
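As a reference point, the baseline the study starts from can be sketched as plain distributed gradient descent: in every round each device computes the gradient of its local loss and uploads it, and the server sums the uploads and takes one gradient step. The least-squares loss, the function names, the data, and the step size below are illustrative assumptions, not details from the paper.

```python
from typing import List, Tuple

Vector = List[float]
Dataset = List[Tuple[Vector, float]]  # (features, target) pairs held by one device


def local_gradient(theta: Vector, data: Dataset) -> Vector:
    """Gradient of the local least-squares loss 0.5 * sum((x . theta - y)**2)."""
    grad = [0.0] * len(theta)
    for x, y in data:
        residual = sum(xi * ti for xi, ti in zip(x, theta)) - y
        for j, xj in enumerate(x):
            grad[j] += residual * xj
    return grad


def federated_gd(devices: List[Dataset], dim: int, step: float, rounds: int) -> Vector:
    """Plain distributed gradient descent: every device uploads its full
    gradient every round; the server sums the uploads and takes one step."""
    theta = [0.0] * dim
    for _ in range(rounds):
        uploads = [local_gradient(theta, data) for data in devices]  # one upload per device
        total = [sum(g[j] for g in uploads) for j in range(dim)]
        theta = [t - step * g for t, g in zip(theta, total)]
    return theta


if __name__ == "__main__":
    # Hypothetical data: two devices holding noise-free samples of y = 2*x1 - x2.
    devices = [[([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0)], [([1.0, 1.0], 1.0)]]
    print(federated_gd(devices, dim=2, step=0.1, rounds=200))  # close to [2.0, -1.0]
```

Every device uploads `dim` full-precision numbers in every round; this per-round, per-device traffic is what quantization and adaptive communication aim to cut.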

Quantizing the gradient, for example by representing each floating-point number with 6 bits instead of 64, greatly reduces the number of bits transmitted in each communication (in this example, by a factor of more than 10). The adaptive communication mechanism, in turn, effectively reduces the number of communication rounds. For example, if the information a device would send differs very little from what it sent previously, that upload can be skipped and the server simply reuses the previously received information. Both techniques introduce errors into the gradient, but the study shows that, with proper design, convergence of the training process is still guaranteed, and for strongly convex optimization problems the proposed algorithm achieves linear convergence (also called exponential or geometric convergence).
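The two ideas can be sketched together in the spirit of the “quantized gradient innovation” named in the paper’s title: a device quantizes the change between its new gradient and the quantized gradient it last uploaded, then decides whether that change is large enough to be worth sending. This is a simplified sketch under stated assumptions: the uniform quantizer, the function names, and especially the fixed `threshold` in `should_upload` are hypothetical simplifications, and the paper’s actual skipping criterion is not reproduced here.

```python
from typing import List, Tuple

Vector = List[float]


def quantize_innovation(grad: Vector, prev_q: Vector, bits: int) -> Vector:
    """Quantize grad relative to the last uploaded quantized gradient prev_q.

    Each coordinate of the innovation (grad - prev_q) is rounded to the nearest
    of 2**bits evenly spaced levels on [-R, R], where R is the largest absolute
    coordinate of the innovation. A device would transmit R plus one
    bits-bit level index per coordinate. Returns prev_q + Q(innovation)."""
    innovation = [g - p for g, p in zip(grad, prev_q)]
    radius = max(abs(v) for v in innovation)
    if radius == 0.0:
        return list(prev_q)  # nothing changed, nothing to quantize
    levels = 2 ** bits - 1  # number of gaps between the 2**bits levels
    spacing = 2.0 * radius / levels
    quantized = []
    for v, p in zip(innovation, prev_q):
        index = round((v + radius) / spacing)  # integer in [0, levels]
        quantized.append(p - radius + index * spacing)
    return quantized


def should_upload(new_q: Vector, last_sent_q: Vector, threshold: float) -> bool:
    """Hypothetical lazy rule: upload only if the quantized gradient moved by
    more than `threshold` in squared Euclidean distance."""
    change = sum((a - b) ** 2 for a, b in zip(new_q, last_sent_q))
    return change > threshold


def lazy_quantized_step(grad: Vector, last_sent_q: Vector, bits: int,
                        threshold: float) -> Tuple[Vector, bool]:
    """One device-side step. Returns the quantized gradient the server will use
    this round and whether an upload actually happened."""
    new_q = quantize_innovation(grad, last_sent_q, bits)
    if should_upload(new_q, last_sent_q, threshold):
        return new_q, True
    return last_sent_q, False  # skipped: server reuses the stale value
```

Because each coordinate is rounded to the nearest of `2**bits` levels spanning [-R, R], the per-coordinate quantization error is at most R / (2**bits - 1). When `lazy_quantized_step` skips an upload, the server keeps using the device’s last uploaded quantized gradient, which is the reuse of previous information described above.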

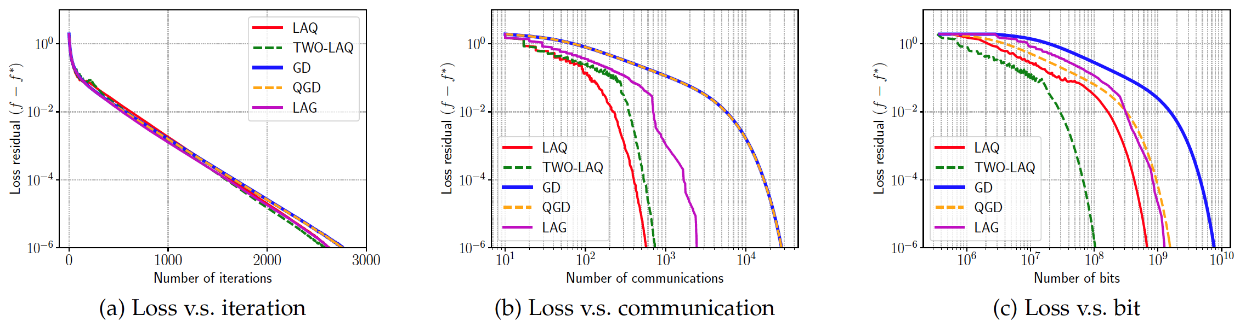

The study explicitly characterizes how the rate of convergence depends on the design parameters. The experimental results in Figure 2 verify the convergence of the algorithm and show that, compared with existing methods, the proposed methods (LAQ and TWO-LAQ in the figure, both developed by the research group) significantly reduce the total traffic of the training process.

Figure 2. Convergence of the loss function: (a) loss vs. iteration; (b) loss vs. number of communications; (c) loss vs. number of bits transmitted.
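For readers unfamiliar with the term, the linear (geometric) convergence mentioned above has a standard meaning, stated here in generic form. The symbols are illustrative assumptions: $\mathcal{L}$ is the training loss, $\theta^k$ the model after $k$ iterations, $\theta^\star$ a minimizer, $c \ge 1$ a constant, and $\sigma$ the contraction factor. The paper’s specific expression for $\sigma$ in terms of its design parameters is not reproduced here.

$$
\mathcal{L}(\theta^k) - \mathcal{L}(\theta^\star) \le c\,\sigma^k \bigl(\mathcal{L}(\theta^0) - \mathcal{L}(\theta^\star)\bigr), \qquad 0 < \sigma < 1,
$$

so any number of iterations satisfying

$$
k \ge \frac{\ln\bigl(c\,(\mathcal{L}(\theta^0) - \mathcal{L}(\theta^\star)) / \varepsilon\bigr)}{\ln(1/\sigma)}
$$

guarantees a loss gap of at most $\varepsilon$. Intuitively, the errors introduced by quantization and skipped uploads can push $\sigma$ closer to 1, so each iteration makes less progress; the payoff is that each iteration costs far fewer transmitted bits.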

While preserving the model’s learning performance, this research substantially reduces the amount of communication in federated learning, typically by more than 50%. This lowers the bandwidth required between the server and the devices and also shortens training time. The work has strong theoretical and engineering significance.

The work was jointly completed by SUSTech, Zhejiang University (ZJU), Rensselaer Polytechnic Institute (RPI), and the University of Minnesota (UMN). Jun Sun, a visiting student at SUSTech, is the first author of the paper. Prof. Zaiyue Yang is the corresponding author, and SUSTech is the first affiliation.

This research was funded by the National Natural Science Foundation of China (NSFC), the International Collaboration Project of Guangdong Province, and the International Collaboration Project of Shenzhen Municipality.

Paper link: https://ieeexplore.ieee.org/abstract/document/9238427


Proofread by Adrian Cremin, Yingying XIA
