In daily life, people with disabilities in both arms often need to grasp objects when no one is available to assist them. One potential solution is to have robots grasp the target objects for them. A key issue is how to capture the person's grasping intention and enable the robot to understand and execute it accurately.

Many current studies aimed at helping people with such disabilities use human physiological signals, such as electroencephalography (EEG) and electromyography (EMG), to control robots in grasping tasks. However, because these signals vary between individuals and are unstable, it is difficult to use them to control a robotic arm so that it effectively grasps everyday objects of various sizes and shapes, including transparent and specular objects, when they are densely placed.

Chengjie Zhang, an undergraduate student from the Department of Mechanical and Energy Engineering at the Southern University of Science and Technology (SUSTech), recently published research that combines head-based control and mixed reality feedback in a human-robot interface designed to help people with disabilities in both arms grasp everyday objects effectively.

This research work, entitled “An Effective Head-Based HRI for 6D Robotic Grasping Using Mixed Reality,” has been published in IEEE Robotics and Automation Letters, an international journal on robotics.

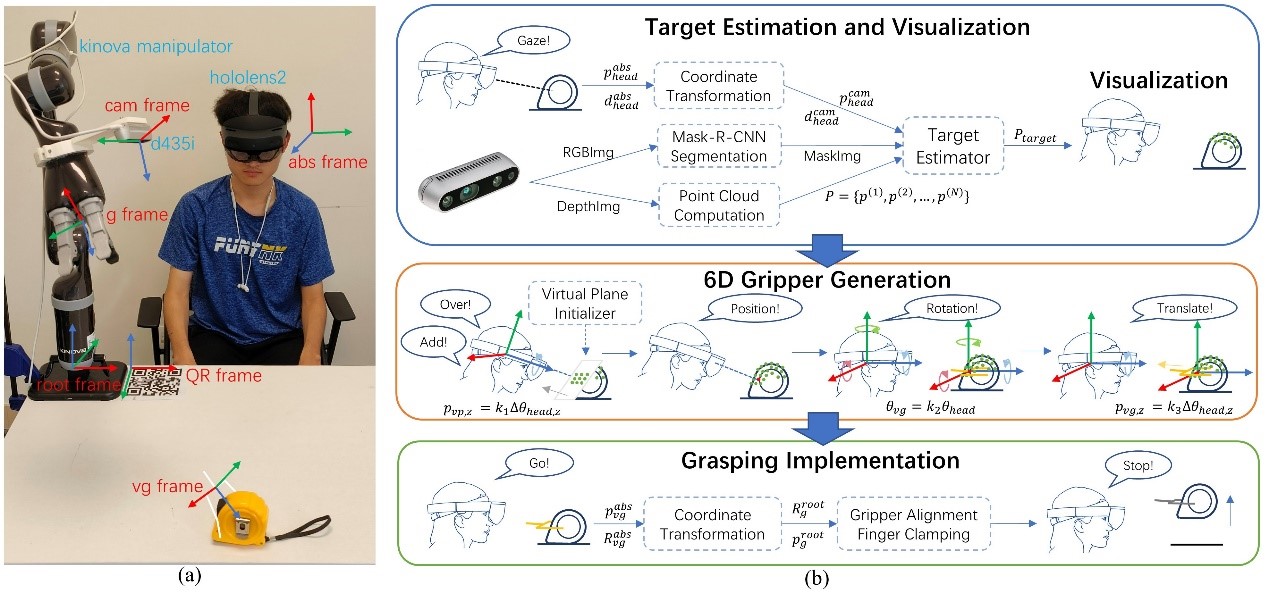

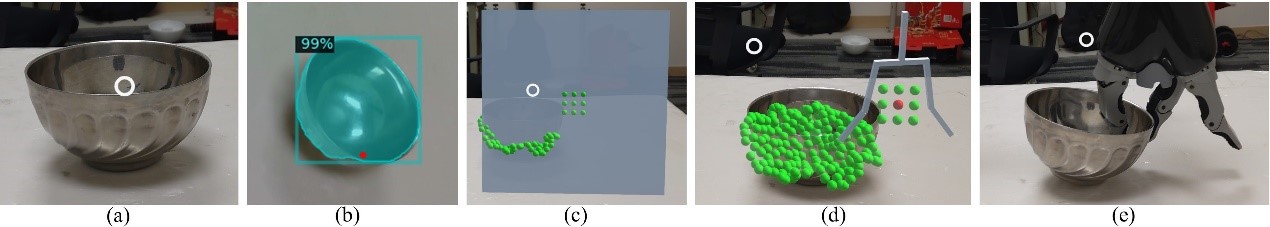

In this study, Chengjie Zhang combines head-based control and mixed reality feedback to bring human perception and decision-making into robotic grasping control, thus improving the robot’s grasping ability. Through mixed reality feedback, operators can visualize the perception result (a point cloud of the objects) and its deviation from the real object. With this feedback, operators can intuitively use head gaze and rotation to control the 6D grasping pose (position and orientation) of the robotic arm, allowing it to grasp complex objects.

Figure 1. Scene overview (a) and system framework (b)

Figure 2. Control step diagram (a)-(e)
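The idea of steering a 6D grasp pose with head gaze and rotation can be illustrated with a short sketch. This is not the method from the paper, whose details are not given here; it only assumes, for illustration, that the grasp position is the point-cloud point closest to the gaze ray and that the grasp orientation copies the head's roll, pitch, and yaw. All function names (`nearest_point_to_ray`, `rotation_from_rpy`, `grasp_pose`) are hypothetical.

```python
import math


def nearest_point_to_ray(origin, direction, points):
    """Return the cloud point closest to the gaze ray, or None if no point lies in front.

    Assumption for illustration: the operator selects a grasp position by looking at it.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    d = [c / norm for c in direction]  # unit gaze direction
    best, best_dist = None, math.inf
    for p in points:
        v = [p[i] - origin[i] for i in range(3)]
        t = sum(v[i] * d[i] for i in range(3))  # distance along the ray
        if t <= 0:
            continue  # point is behind the head, ignore it
        # Perpendicular distance from the point to the ray
        perp = [v[i] - t * d[i] for i in range(3)]
        dist = math.sqrt(sum(c * c for c in perp))
        if dist < best_dist:
            best, best_dist = p, dist
    return best


def rotation_from_rpy(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def grasp_pose(head_origin, gaze_dir, head_rpy, cloud):
    """Combine a gaze-selected position with a head-derived orientation.

    Returns (position, rotation_matrix), or None if the gaze hits nothing.
    """
    position = nearest_point_to_ray(head_origin, gaze_dir, cloud)
    if position is None:
        return None
    return position, rotation_from_rpy(*head_rpy)
```

Calling `grasp_pose((0, 0, 0), (0, 0, 1), (0, 0, 0), cloud)` with a small list of 3D points returns the point nearest the line of sight together with an identity orientation. A real interface would also need the mixed reality feedback loop described above so that the operator can correct for perception errors before the arm moves.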

Grasping experiments and comparative analysis showed that the proposed human-robot interface is more effective at grasping common everyday objects, adapts to unknown objects, and has a high tolerance for perception errors.

Video 1. Demonstration with actual control performance

Chengjie Zhang is the first author of this paper. Prof. Chenglong Fu from the Department of Mechanical and Energy Engineering at SUSTech is the corresponding author.

This research was supported by the National Natural Science Foundation of China (NSFC), Shenzhen Science and Technology Innovation Commission, and the Guangdong Province College Student Climbing Program.

Paper link: https://ieeexplore.ieee.org/document/10080982


Proofread By Adrian Cremin, Yingying XIA
