Associate Professor Jin ZHANG and her research team from the Department of Computer Science and Engineering at the Southern University of Science and Technology (SUSTech) have made significant strides in the field of ubiquitous computing.

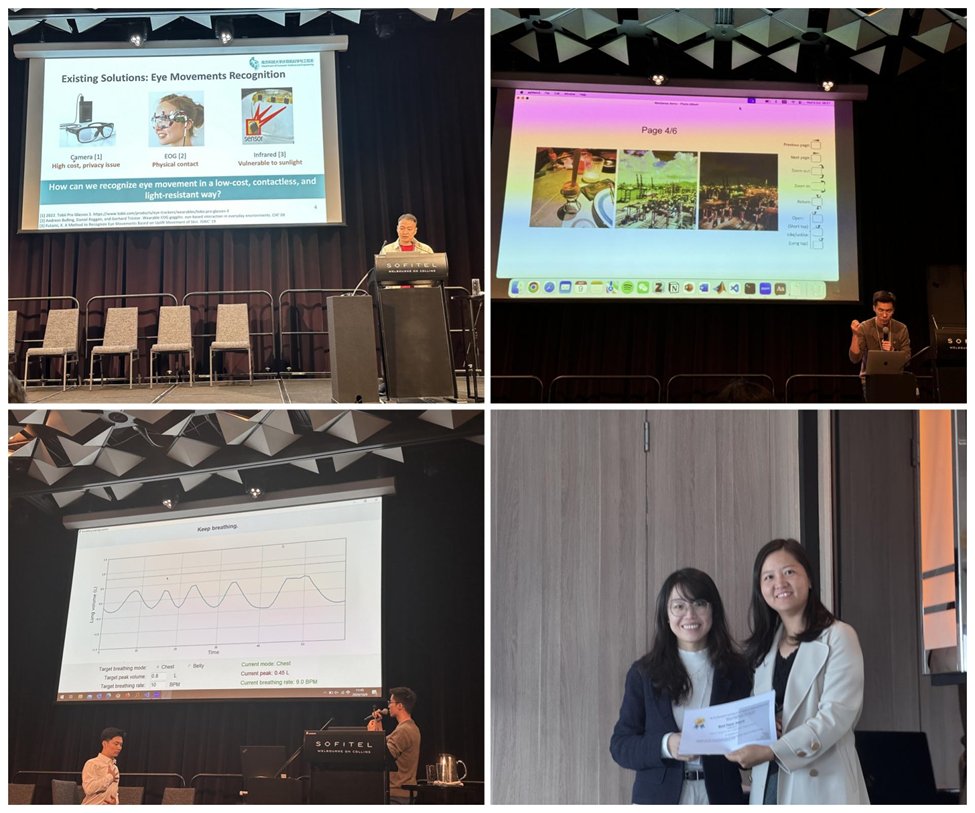

Three of their papers were recently accepted at the prestigious UbiComp/ISWC 2024 conference, and another paper won the Best Paper Award at the WellComp Workshop, held in conjunction with UbiComp 2024.

From left to right: Chi XU, Wentao XIE, Associate Professor Jin ZHANG, and Tao SUN

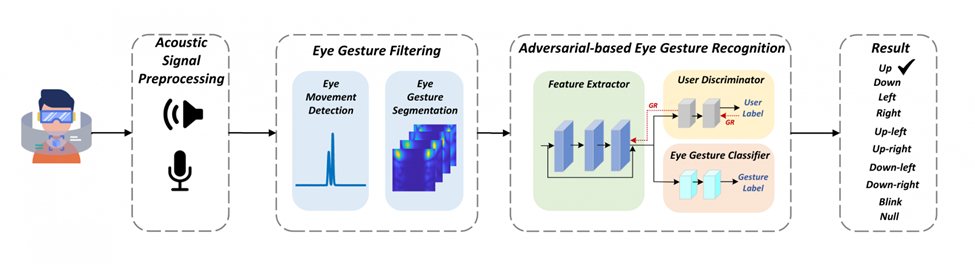

Paper 1: EyeGesener: Eye Gesture Listener for Smart Glasses Interaction using Acoustic Sensing

Current smart glasses rely on hand gestures or voice commands, which are not ideal when the user’s hands are occupied. The research team developed EyeGesener, an eye gesture recognition system based on acoustic sensing.

The system works by detecting subtle skin movements around the eyes via speakers and microphones embedded in the glasses. Using advanced orthogonal frequency-division multiplexing technology, the system accurately identifies intentional eye movements for interaction, differentiating them from regular eye movements. Additionally, an adversarial training strategy ensures that the system adapts to different users without the need for retraining, improving usability across a wide range of people.

The research team collected experimental data from 16 participants. The results showed that EyeGesener recognized eye gestures with an F1 score of 0.93 and a false positive rate of just 0.03. This breakthrough paves the way for more intuitive, hands-free interaction with smart glasses, with applications spanning augmented reality (AR), virtual reality (VR), and intelligent assistive devices.
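For readers unfamiliar with the two metrics quoted above, the following minimal sketch shows how an F1 score and a false positive rate are derived from confusion-matrix counts. The function name and the counts are made up for illustration; they are not the paper's data or evaluation code.

```python
def f1_and_fpr(tp, fp, fn, tn):
    """Return (F1 score, false positive rate) from confusion-matrix counts."""
    # Precision: share of detected gestures that were real gestures.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of real gestures that were detected.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    # FPR: share of non-gesture samples wrongly flagged as gestures.
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return f1, fpr


# Illustrative counts only (assumption, not taken from the paper).
f1, fpr = f1_and_fpr(tp=90, fp=5, fn=10, tn=95)
print(f"F1 = {f1:.3f}, FPR = {fpr:.3f}")
```

A low false positive rate matters for this kind of system because ordinary eye movements vastly outnumber intentional gestures, so even a small rate of false triggers would be noticeable in daily use.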

Master’s students Tao SUN and Yankai ZHAO are the co-first authors of this paper. Associate Professor Jin ZHANG is the corresponding author.

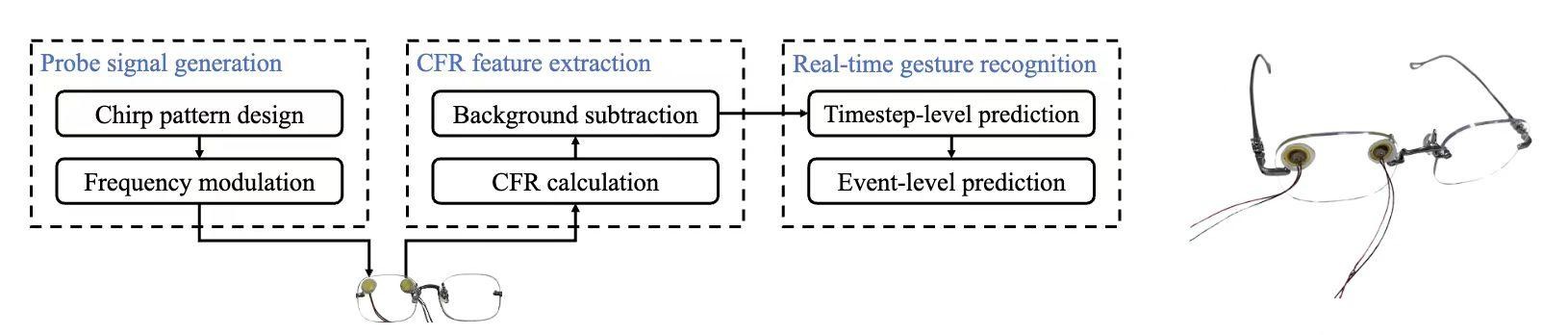

Paper 2: RimSense: Enabling Touch-based Interaction on Eyeglass Rim Using Piezoelectric Sensors

In commercial smart glasses, touch panels are typically located on the temple arms, separate from the display, which can make interaction less intuitive. To address this, the research team developed RimSense, a proof-of-concept system that transforms the rim of the glasses into a touch-sensitive surface.

Using piezoelectric sensors, RimSense recognizes touch gestures from changes in the channel frequency response, allowing for seamless interaction directly on the eyeglass frame. The system uses buffered chirp signals to improve sensing accuracy and reduce noise. It also combines a deep learning framework with a finite state machine algorithm to infer high-level touch events.
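The role of a finite state machine in such a pipeline can be sketched as follows. This is a simplified illustration, not the RimSense implementation: it assumes a hypothetical classifier that outputs one touch/no-touch flag per frame, and the names `frames_to_events`, `min_on`, `min_off`, and `frame_ms` are invented for this example.

```python
def frames_to_events(frames, min_on=3, min_off=2):
    """Group per-frame touch flags into (start, end) events.

    frames  : sequence of 0/1 flags from a hypothetical per-frame classifier
    min_on  : consecutive touch frames required to open an event
    min_off : consecutive idle frames required to close an event
    Returns a list of (start_index, end_index_exclusive) tuples.
    """
    frames = list(frames)
    events = []
    state = "IDLE"
    run = 0       # length of the current run that contradicts the state
    start = None
    for i, touched in enumerate(frames):
        if state == "IDLE":
            run = run + 1 if touched else 0
            if run >= min_on:
                state, start, run = "TOUCH", i - run + 1, 0
        else:  # state == "TOUCH"
            run = run + 1 if not touched else 0
            if run >= min_off:
                events.append((start, i - run + 1))
                state, run = "IDLE", 0
    if state == "TOUCH":
        # Close an event that is still open when the stream ends.
        events.append((start, len(frames) - run))
    return events


# Illustrative stream: a one-frame glitch, then two touches,
# the first of which contains a one-frame dropout.
flags = [0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 1]
events = frames_to_events(flags)
frame_ms = 20  # assumed frame period in milliseconds
durations_ms = [(end - start) * frame_ms for start, end in events]
print(events, durations_ms)
```

The two thresholds act as a debounce: isolated misclassified frames neither start nor end an event, and each event's length in frames gives a duration estimate.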

A functional prototype was created using two commercially available PZT sensors, capable of recognizing eight distinct touch gestures and estimating gesture duration, enabling varied inputs based on gesture length.

Testing with 30 participants showed impressive results, with an F1 score of 0.95 for gesture recognition and an 11% error in duration estimation. User feedback indicated that RimSense is user-friendly, easy to learn, and enjoyable to use, offering valuable insights for future eyewear design.

Wentao XIE, a joint doctoral student at SUSTech and the Hong Kong University of Science and Technology (HKUST), is the first author of this paper. Associate Professor Jin ZHANG is a co-corresponding author, with SUSTech serving as the first affiliated institution for this work.

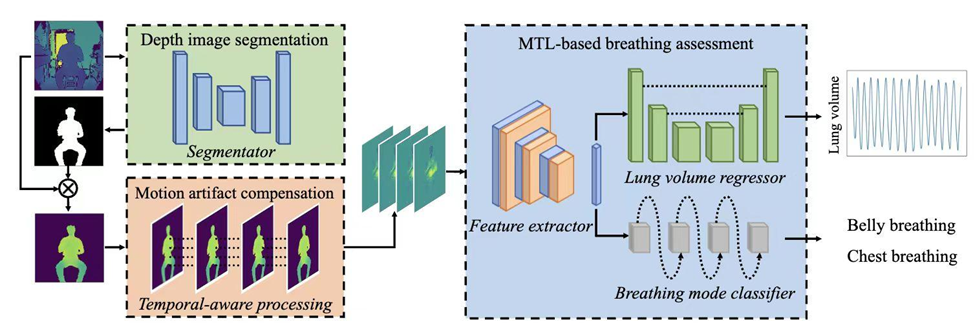

Paper 3: DeepBreath: Breathing Exercise Assessment with a Depth Camera

Breathing exercises play a crucial role in improving lung function for individuals with chronic obstructive pulmonary disease (COPD). Two key indicators for assessing these exercises are the breathing pattern (chest or abdominal) and lung capacity. To facilitate this evaluation, the research team developed DeepBreath, a system that uses a depth camera to assess breathing exercises.

DeepBreath enhances respiratory pattern classification using a multi-task learning framework, which considers the relationship between breathing patterns and lung capacity. For calibration-free lung capacity measurement, the system employs a data-driven approach powered by an innovative UNet deep learning model, allowing for universal estimation. Additionally, a lightweight contour segmentation model transfers knowledge from advanced models to improve accuracy.

To address issues with involuntary movements, the system integrates a time-aware body motion compensation algorithm. In collaboration with a clinical center, the research team conducted experiments on 22 healthy individuals and 14 COPD patients, demonstrating that DeepBreath delivers highly accurate breathing metrics in realistic conditions.

Wentao XIE and Chi XU, a former undergraduate from SUSTech (now a Ph.D. student at HKUST), are the co-first authors of this paper. This research was supervised by Associate Professor Jin ZHANG and Chair Professor Qian ZHANG of HKUST.

Paper 4: AcousAF: Acoustic Sensing-Based Atrial Fibrillation Detection System for Mobile Phones

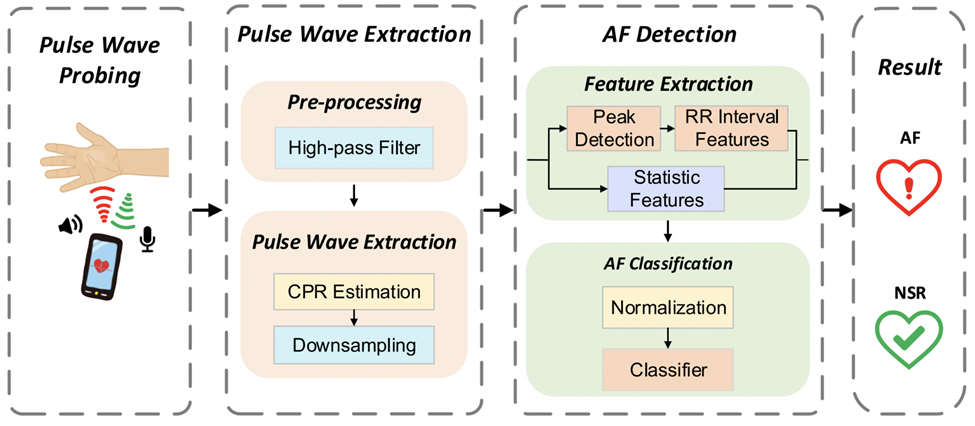

This study introduces AcousAF, an atrial fibrillation detection system designed for smartphones. It aims to overcome the limitations of traditional heart monitoring methods and make atrial fibrillation easier to monitor.

The system utilizes the smartphone’s speaker and microphone to capture pulse waves from the wrist. It integrates meticulously developed algorithms for pulse wave detection, extraction, and atrial fibrillation diagnosis to ensure both accuracy and reliability.
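To give a sense of what a pulse-wave-based check can look like, the sketch below measures how irregular the intervals between successive pulse peaks are, since an irregular rhythm is a hallmark of atrial fibrillation. This is a generic illustration and not the AcousAF algorithm: the function names, the peak timestamps, and the 0.15 threshold are all assumptions chosen for the example, and the threshold has no clinical validity.

```python
from statistics import mean, stdev


def interval_irregularity(beat_times):
    """Coefficient of variation of the intervals between pulse peaks.

    beat_times: ascending pulse-peak timestamps in seconds (assumed to
    come from an upstream peak detector, which is not shown here).
    """
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    if len(intervals) < 2:
        raise ValueError("need at least three beat times")
    return stdev(intervals) / mean(intervals)


def looks_irregular(beat_times, threshold=0.15):
    """Flag a rhythm as irregular; the threshold is purely illustrative."""
    return interval_irregularity(beat_times) > threshold


# Synthetic timestamps for illustration only.
steady = [0.0, 0.8, 1.6, 2.4, 3.2, 4.0]
erratic = [0.0, 0.5, 1.6, 2.0, 3.3, 3.7]
print(looks_irregular(steady), looks_irregular(erratic))
```

A practical detector would need robust peak extraction and a validated classifier on top of a feature like this one.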

In a comprehensive experimental study involving 20 participants, the research team demonstrated the system’s exceptional performance, achieving a detection accuracy of 92.8%. This significant achievement earned them the WellComp Best Paper Award.

Xuanyu LIU, an undergraduate student at SUSTech, is the first author of this paper. Associate Professor Jin ZHANG is the corresponding author.

UbiComp is a leading international conference hosted by the Association for Computing Machinery (ACM) and is recognized for its focus on human-computer interaction and ubiquitous computing. It is also classified as a Class A conference by the China Computer Federation (CCF).

The conference covers a broad range of topics, including the design, development, and deployment of ubiquitous and wearable computing technologies, as well as the study of how people experience them and their impact on society.

Paper links (in order of appearance above):

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies: https://doi.org/10.1145/3678541

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies: https://doi.org/10.1145/3631456

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies: https://doi.org/10.1145/3678519

UbiComp 2024 WellComp Workshop: https://dl.acm.org/doi/10.1145/3675094.3678488

Proofread By: Adrian Cremin, Yingying XIA

Photo By: Department of Computer Science and Engineering