The global cloud computing market has grown rapidly over the past few years. However, a lack of trust in cloud operators has long been a major obstacle keeping many data owners from embracing cloud technology, especially in finance and healthcare, where data security is a primary concern. To address this trust issue, confidential computing has been proposed: a computing paradigm that preserves the confidentiality of computation and data on untrusted computing platforms.

Professors Yinqian Zhang and Fengwei Zhang’s research team from the Department of Computer Science and Engineering (CSE) and the Sfakis Research Institute of Trustworthy Autonomous Systems at the Southern University of Science and Technology (SUSTech) have recently made a breakthrough in the research field of confidential computing.

They have collaborated with scholars and researchers from several top universities and industry partners, including Duke University, Hong Kong University of Science and Technology, George Mason University, Ohio State University, Hong Kong Polytechnic University, Singapore Management University, Hunan University, Vanderbilt University, Guangzhou University, and Ant Group.

As a result, they have published a number of papers at top international computer security conferences, including the IEEE Symposium on Security and Privacy, the ACM Conference on Computer and Communications Security, and the USENIX Security Symposium.

Distributed confidential computing security framework

Byzantine Fault Tolerant (BFT) protocols are distributed protocols that offer high reliability. They have important applications in blockchains and distributed file systems, but their performance bottlenecks and low scalability have restricted their adoption. In contrast, Crash Fault Tolerant (CFT) protocols perform better but tolerate only crash failures, so they cannot support applications built on a decentralized trust architecture, such as blockchains.
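Part of the cost gap between the two protocol families can be seen from the classical replica-count bounds, which the article does not state but which are standard results: tolerating f faulty nodes takes at least 2f+1 replicas under the crash model and at least 3f+1 under the Byzantine model (without trusted hardware). The helper names below are hypothetical and serve only as a small illustration.

```python
def min_replicas(f: int, byzantine: bool) -> int:
    """Smallest cluster size that tolerates f faulty nodes (classical bounds)."""
    if f < 0:
        raise ValueError("f must be non-negative")
    return 3 * f + 1 if byzantine else 2 * f + 1


def quorum_size(f: int, byzantine: bool) -> int:
    """Number of matching replies needed in a minimal cluster for f faults."""
    return 2 * f + 1 if byzantine else f + 1


if __name__ == "__main__":
    for f in (1, 2, 3):
        print(
            f"f={f}: CFT needs {min_replicas(f, False)} replicas, "
            f"BFT needs {min_replicas(f, True)} replicas"
        )
```

Larger clusters and larger quorums mean more messages per decision, which is one reason BFT protocols tend to scale less well than CFT protocols.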

The researchers combined confidential computing and distributed systems, using the efficient CFT protocol to achieve high-security BFT by virtue of the code integrity provided by the Trusted Execution Environment (TEE). However, a simple fusion of CFT and TEE does not equal BFT. The team used a formal verification method called model checking to test the correctness of the “CFT+TEE=BFT” equation. Specifically, they used the TLC model checker to model the behavior of Raft, a widely used CFT protocol, in a trusted execution environment, and found both safety and liveness vulnerabilities.
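To give a feel for what model checking does, the following is a toy explicit-state search in Python. It is not TLC or TLA+, and it is not the team's Raft model. It assumes a hypothetical single-term election with three nodes and two candidates, plus a hypothetical "rollback" action in which a node forgets that it has already voted. The checker explores every reachable state and reports the first one that violates the safety property "at most one candidate holds a majority".

```python
from collections import deque

NODES = (0, 1, 2)
NUM_CANDIDATES = 2
MAJORITY = 2


def successors(state, allow_rollback):
    """Yield every state reachable in one step from `state`."""
    voted, grants = state  # voted: tuple of bools, grants: one frozenset per candidate
    for n in NODES:
        if not voted[n]:
            # Node n grants its single vote to one of the candidates.
            for c in range(NUM_CANDIDATES):
                new_voted = voted[:n] + (True,) + voted[n + 1:]
                new_grants = tuple(
                    g | {n} if i == c else g for i, g in enumerate(grants)
                )
                yield new_voted, new_grants
        elif allow_rollback:
            # Hypothetical fault: node n forgets that it voted, while the
            # vote it already sent stays counted by the candidate.
            yield voted[:n] + (False,) + voted[n + 1:], grants


def is_safe(state):
    """Safety property: at most one candidate has a majority of votes."""
    _, grants = state
    return sum(len(g) >= MAJORITY for g in grants) <= 1


def check(allow_rollback):
    """Breadth-first search; return a violating state, or None if none exists."""
    init = ((False,) * len(NODES), (frozenset(),) * NUM_CANDIDATES)
    seen = {init}
    queue = deque([init])
    while queue:
        state = queue.popleft()
        if not is_safe(state):
            return state
        for nxt in successors(state, allow_rollback):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return None


if __name__ == "__main__":
    print("without rollback:", check(allow_rollback=False))
    print("with rollback:   ", check(allow_rollback=True))
```

Without the rollback action the search finds no violation, because each node can vote only once. With it, the search finds a state in which both candidates hold a majority. Real model checkers apply the same exhaustive idea to far larger protocol models.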

This is the first successful application of model checking to the Raft protocol. Based on the model checking results, the team designed the ENGRAFT system, the first secure TEE-based implementation of Raft. ENGRAFT contains two sub-systems: Trustworthy distributed in-memory Key-value Storage (TIKS, shown in Figure 1) and Malicious Leader Detector (MLD). ENGRAFT fixes the safety and liveness vulnerabilities of the Raft protocol in trusted execution environments. It provides a reference for the “CFT+TEE” design paradigm and can be used as the underlying consensus algorithm of a consortium chain to support practical applications.

These research results were presented at the ACM Conference on Computer and Communications Security (ACM CCS 2022) under the title “ENGRAFT: Enclave-guarded Raft on Byzantine Faulty Nodes”.

Weili Wang and Sen Deng from the Department of CSE at SUSTech are the co-first authors of this study. Prof. Yinqian Zhang is the corresponding author.

Figure 1. Message interaction of TIKS

Another line of the team’s work in distributed confidential computing leverages distributed systems to protect confidential computing from forking attacks and rollback attacks. Public cloud platforms, such as Microsoft Azure and Alibaba Cloud, already use TEEs to provide confidential computing services, which aim to boost clients’ confidence in their outsourced data and code. However, these TEEs remain exposed to forking and rollback attacks. For example, in a payment system implemented in TEEs, an adversary can spend the same coins in multiple payments by reverting its account balance to an earlier state (i.e., a double-spending attack). While prior works aim to provide state continuity for TEEs, they all suffer from severe performance bottlenecks and rely on strong trust assumptions.
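The double-spending scenario can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sealing key, the `seal`/`unseal`/`pay` helpers, and the use of an HMAC as a stand-in for enclave sealing are all hypothetical and do not come from the paper. The point is that integrity protection alone does not stop replay of an old but validly sealed state, whereas a freshness check against a trusted version number does.

```python
import hashlib
import hmac
import json

# Hypothetical key known only to the enclave (stand-in for a sealing key).
SEAL_KEY = b"hypothetical-enclave-sealing-key"


def seal(balance, version):
    """Serialize the account state and attach an integrity tag."""
    payload = json.dumps({"balance": balance, "version": version}).encode()
    tag = hmac.new(SEAL_KEY, payload, hashlib.sha256).digest()
    return payload, tag


def unseal(blob, trusted_version=None):
    """Verify integrity and, if a trusted version is supplied, freshness."""
    payload, tag = blob
    expected = hmac.new(SEAL_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("tampered state")
    state = json.loads(payload)
    if trusted_version is not None and state["version"] != trusted_version:
        raise ValueError("stale state: rollback detected")
    return state


def pay(blob, amount, trusted_version=None):
    """Deduct `amount` from the sealed balance and return the new sealed state."""
    state = unseal(blob, trusted_version)
    if state["balance"] < amount:
        raise ValueError("insufficient funds")
    return seal(state["balance"] - amount, state["version"] + 1)


if __name__ == "__main__":
    old = seal(balance=10, version=0)
    new = pay(old, 10)   # legitimate payment: balance 0, version 1
    pay(old, 10)         # replayed old state is accepted: the coins are spent twice
    try:
        pay(old, 10, trusted_version=1)  # freshness check rejects the replay
    except ValueError as err:
        print(err)
```

The hard part in practice is where the trusted version number comes from, which is the state continuity problem the paragraph above refers to.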

To address these issues, the researchers present Narrator (Figure 2), a system based on decentralized trust to provide performant state continuity protection for cloud TEEs. There are two key ideas behind the design. First, it keeps the interaction with blockchain rare and outside the critical path of frequent state updates or reads. To this end, it only uses an external blockchain to initialize a distributed system of TEEs, laying down the decentralized trust anchor (instead of recording frequent state updates). Second, the distributed system initialized by the blockchain can work as a trusted system to provide state continuity protection for clients’ TEE applications.
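The second idea, a group of TEEs acting as a trusted service that remembers the latest state version, can be sketched as a majority-quorum version store. This is a simplified illustration and not Narrator's actual protocol: the `Replica` class, `write_version`, and `read_version` are hypothetical names, and the replicas are assumed to be honest but crash-prone because their code is assumed to run inside TEEs.

```python
class Replica:
    """One TEE-hosted replica that remembers the highest version per application."""

    def __init__(self):
        self.versions = {}
        self.up = True

    def store(self, app_id, version):
        """Record a version; return False if this replica is down."""
        if not self.up:
            return False
        self.versions[app_id] = max(version, self.versions.get(app_id, -1))
        return True

    def load(self, app_id):
        """Return the stored version (-1 if unknown), or None if down."""
        return self.versions.get(app_id, -1) if self.up else None


def write_version(replicas, app_id, version):
    """An update succeeds only if a majority of replicas acknowledge it."""
    acks = sum(r.store(app_id, version) for r in replicas)
    return acks >= len(replicas) // 2 + 1


def read_version(replicas, app_id):
    """Read from a majority and take the highest version seen."""
    answers = [v for r in replicas if (v := r.load(app_id)) is not None]
    if len(answers) < len(replicas) // 2 + 1:
        raise RuntimeError("no quorum available")
    return max(answers)


if __name__ == "__main__":
    cluster = [Replica() for _ in range(3)]
    write_version(cluster, "payments", 1)
    cluster[0].up = False                  # one replica misses the next update
    write_version(cluster, "payments", 2)
    cluster[0].up, cluster[2].up = True, False
    print(read_version(cluster, "payments"))
```

Because any two majorities overlap in at least one replica, a read always sees the latest acknowledged version, so a client TEE application can compare its restored state against that version without touching the blockchain on each update.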

These research results were presented at ACM CCS 2022 under the title “Narrator: Secure and Practical State Continuity for Trusted Execution in the Cloud”.

Jianyu Niu from the Department of CSE at SUSTech is the first author of this study. Prof. Yinqian Zhang is the corresponding author.

Figure 2. A system architecture of Narrator

GPU Trusted Execution Environment

To satisfy rapidly increasing performance requirements, current servers and endpoints rely mainly on GPUs for compute-intensive workloads, such as neural network training and inference and 3D games. However, today’s GPUs are completely controlled by a vulnerable operating system (e.g., Linux). Once an attacker breaches the operating system, the data processed in the GPU can be directly compromised. Therefore, servers and endpoints urgently need a TEE for GPUs to ensure data security.

Recent GPU TEEs are mainly based on the x86 architecture and deployed on GPUs with dedicated memory, such as NVIDIA GPUs. Thus, they can leverage the hardware isolation of GPUs to ensure secure computation. However, endpoint GPUs, such as Arm Mali, lack such isolation because they share memory with the CPU, and hence they require a stronger defense mechanism against a compromised system. In addition, current GPU TEE designs mainly rely on x86 hardware features, such as Intel SGX, and on hardware changes, which makes migrating them to Arm endpoints considerably challenging.

To address these problems, the research team proposed StrongBox, a GPU TEE for Arm endpoints. StrongBox is the first domestic lightweight GPU TEE. It reuses the GPU driver to perform non-sensitive operations, such as memory resource allocation and task scheduling. At the same time, it deploys small components (GPU Guard and Task Protector on the left of Figure 3) in the trusted execution environment (EL3 on the left of Figure 3) for security purposes. StrongBox also leverages Arm architecture features (stage-2 (S-2) address translation and TZASC on the right side of Figure 3) to provide precise protection for GPU computing without requiring hardware modification. Experimental results show that StrongBox ensures the security of GPU computing with low performance overhead.

These research results were presented at ACM CCS 2022 under the title “StrongBox: A GPU TEE on Arm Endpoints”.

Yunjie Deng and Chenxu Wang from the Department of CSE at SUSTech are the co-first authors of this study. Prof. Fengwei Zhang is the corresponding author.

Figure 3. StrongBox design overview

Key technologies for security analysis and protection of confidential computing

Many cloud applications, such as machine learning, achieve secure computing by running sensitive data and code in a TEE based on Intel Software Guard Extensions (SGX). However, it is hard for a cloud user to inspect the runtime memory of an SGX enclave, for several reasons.

First, SGX uses hardware isolation and encryption to shield the enclave contents from any software outside the enclave. Second, there is no known solution for determining the enclave location when the kernel is untrusted. In addition, side-channel or replay attacks may cause the enclave memory introspection to return false results.

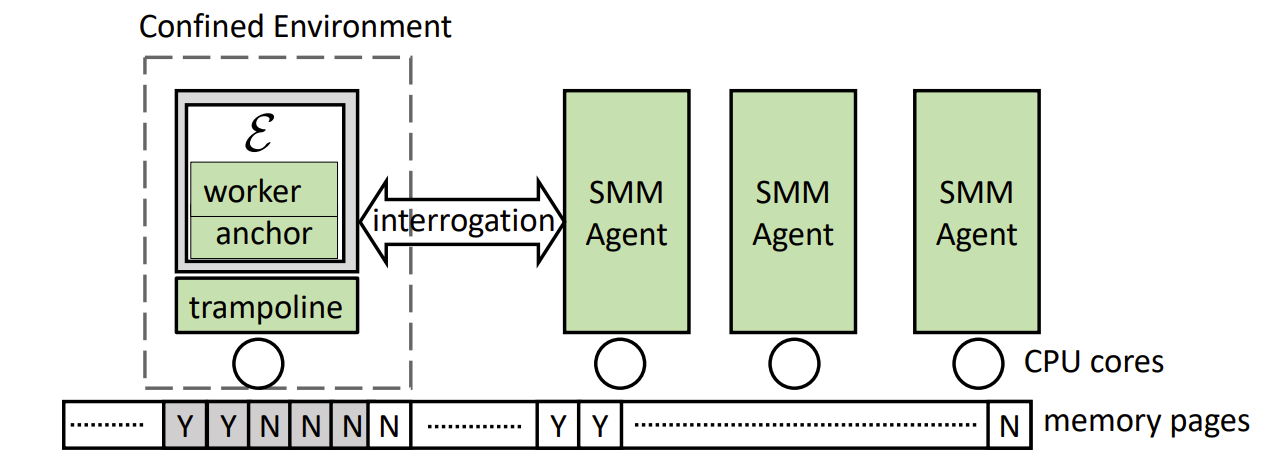

To overcome the above challenges, Prof. Fengwei Zhang’s team presents a system called Secure Memory Introspection for Live Enclave (SMILE). SMILE is deployed on x86-based cloud platforms with system management mode (SMM) and Intel SGX. SMILE relies on an agent running in the enclave platform’s SMM. The agent is semi-trusted: it is trusted to run SMILE but not trusted to learn enclave secrets (Figure 4). SMILE constructs a confined interrogation environment that harnesses system security and cryptography to securely bootstrap trust in an enclave, authenticate its identity, and verify its runtime code integrity. The experimental results show that SMILE reliably introspects the enclave runtime memory with acceptable overhead.

These research results were presented at the IEEE Symposium on Security and Privacy 2022 (IEEE S&P 2022) under the title “SMILE: Secure Memory Introspection for Live Enclave”.

Lei Zhou from the Department of CSE at SUSTech is the first author of this study. Prof. Fengwei Zhang is the corresponding author.

Figure 4. A system view of confined interrogation

Another line of the team’s work on security analysis and protection of confidential computing is an automated detection framework for ciphertext side-channel vulnerabilities. The ciphertext side channel is a serious threat to any TEE that uses deterministic memory encryption. It has been shown that ciphertext side-channel attacks can be mounted on almost all trusted execution environments that use deterministic memory encryption, including AMD SEV-SNP and Arm CCA.
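Why deterministic memory encryption leaks information can be shown with a small Python sketch. Everything here is an assumption made for illustration: the key, the address, and the use of a truncated HMAC as a stand-in for an address-tweaked block cipher (real TEEs use hardware AES-based modes, and an HMAC is not even decryptable). The only property that matters is that the same plaintext written to the same address always yields the same ciphertext.

```python
import hashlib
import hmac

# Hypothetical memory encryption key, held by the hardware and unknown to the attacker.
KEY = b"hypothetical-memory-encryption-key"
BLOCK = 16


def encrypt_block(address, plaintext):
    """Deterministic, address-tweaked stand-in for hardware memory encryption."""
    if len(plaintext) != BLOCK:
        raise ValueError("plaintext must be exactly one block")
    message = address.to_bytes(8, "little") + plaintext
    return hmac.new(KEY, message, hashlib.sha256).digest()[:BLOCK]


def victim_write(memory, address, secret_bit):
    """Victim stores a secret-dependent value into encrypted memory."""
    value = (b"\x01" if secret_bit else b"\x00") * BLOCK
    memory[address] = encrypt_block(address, value)


def attacker_guess(before, after):
    """Attacker sees ciphertext only: an unchanged block means an unchanged value."""
    return 0 if before == after else 1


if __name__ == "__main__":
    address = 0x1000
    for secret_bit in (0, 1):
        memory = {address: encrypt_block(address, bytes(BLOCK))}  # block starts as zeros
        before = memory[address]
        victim_write(memory, address, secret_bit)
        print(secret_bit, attacker_guess(before, memory[address]))
```

The attacker never decrypts anything. Observing whether a ciphertext block changes, or whether it repeats an earlier value, is enough to recover the secret-dependent write.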

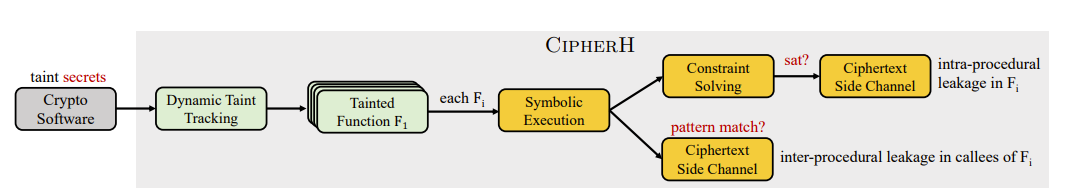

To solve this problem, they proposed CipherH, an automated detection framework for ciphertext side channels that helps developers locate ciphertext side-channel vulnerabilities in software. CipherH qualitatively models the ciphertext side-channel vulnerability, using symbolic constraints to judge, from the attacker’s perspective, whether consecutive memory write operations will lead to a ciphertext side channel (Figure 5). Based on this qualitative model, an automatic detection tool was designed and implemented. Specifically, dynamic taint tracking is used to analyze how private information can propagate through the program, which yields a set of “tainted” functions. These functions are then symbolically executed, both within and across procedures, to locate the vulnerable program points.

In this work, three mainstream encryption libraries are analyzed, and a number of vulnerable program points are found, through which attackers can successfully launch ciphertext side-channel attacks. These findings have been reported to the community, receiving positive responses and acknowledgments.

These research results will be presented at the USENIX Security Symposium 2023 (USENIX Security ’23) under the title “CIPHERH: Automated Detection of Ciphertext Side-channel Vulnerabilities in Cryptographic Implementations”.

Sen Deng from the Department of CSE at SUSTech is the first author of this study. Prof. Shuai Wang from the Department of CSE at HKUST and Prof. Yinqian Zhang are the co-corresponding authors.

Figure 5. Automated detection framework for ciphertext side channels

The above works were partly supported by the National Natural Science Foundation of China (NSFC), the Science, Technology and Innovation Commission of Shenzhen Municipality, and the SUSTechCSE-Ant Group Joint Research Platform on Secure and Trust Technologies.

Paper links (In order of appearance above):

ACM CCS 2022: https://github.com/teecertlab/papers/tree/main/engraft

ACM CCS 2022: https://github.com/teecertlab/papers/tree/main/Narrator

ACM CCS 2022: https://fengweiz.github.io/paper/strongbox-ccs22.pdf

S&P 2022: https://cse.sustech.edu.cn/faculty/~zhangfw/paper/smile-sp22.pdf

USENIX Security ’23: https://github.com/teecertlab/papers/tree/main/CipherH


Proofread By Adrian Cremin, Yingying XIA
