Research on embodied intelligence has increasingly focused on building general-purpose embodied agents from large pre-trained foundation models, with the expectation that such agents could take over some everyday tasks from humans. Models such as Large Language Models (LLMs) draw on the extensive prior knowledge learned from Internet-scale pre-training data, which lets them complete a variety of tasks, including code generation, product recommendation, and even robot manipulation, in response to feedback from external environments. Furthermore, when integrated with Vision-Language Models, agents can directly interpret visual inputs and then reason about, plan, and execute tasks.

However, existing work overlooks the fact that the real world is constantly evolving. Foundation models trained on pre-collected, static datasets therefore cannot stay aligned with a dynamically changing world. When used as agents to solve tasks, these models may suffer from serious hallucination issues (e.g., generating erroneous, non-existent, or misleading information).

To bridge this gap, Chair Professor Yuhui Shi’s research group from the Department of Computer Science and Engineering at the Southern University of Science and Technology (SUSTech) has recently explored the possibility of using a state-of-the-art Large Language Model (e.g., GPT-4) as a “teacher” to train embodied agents through interactive cross-modal imitation learning in a dynamic world. As a result, their method effectively aligns the agent’s behavior with the evolution of the real world.

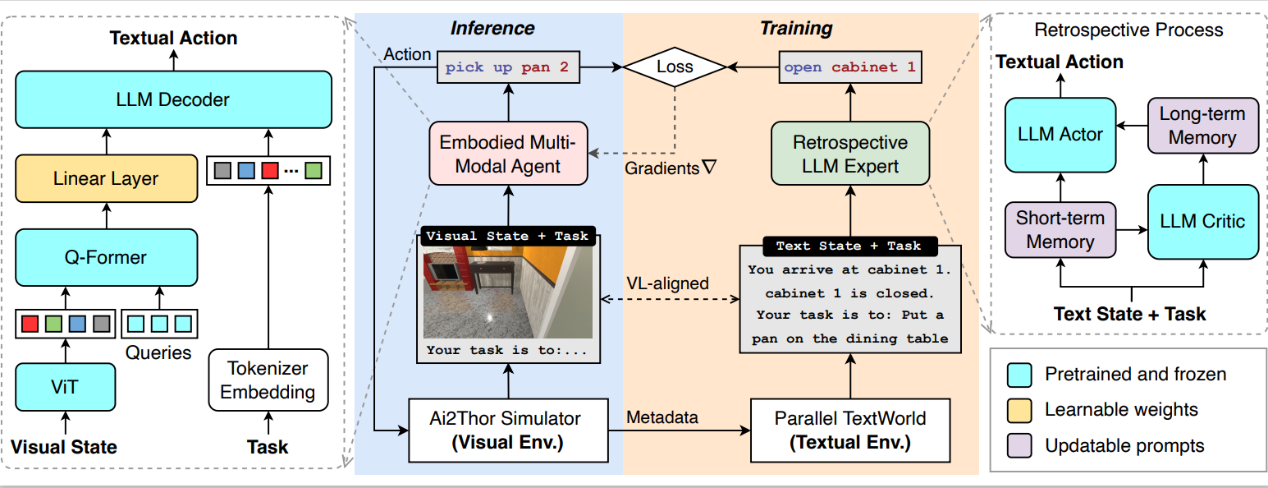

Figure 1. Depiction of Interactive Cross-Modal Imitation Learning

Figure 1 illustrates the core idea of the embodied agent training framework proposed by the researchers. The agent (red module in the figure) is built on a vision-language model; it follows task instructions provided by users and interacts with the environment through visual observations and text actions. Training an agent directly from visual input states raises challenges such as sparse rewards, distribution shifts, and hallucination issues. To overcome them, the researchers use the Planning Domain Definition Language (PDDL) to convert each frame of the visual state into an equivalent abstract text description. This description is then fed to an LLM expert implemented with GPT-4 (green module in the figure), which generates improved text actions for the current environmental state to guide and correct the agent’s behavior. Across a diverse set of household scenarios, the agent’s task success rate improved by 20%-70% compared with state-of-the-art baselines.
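The training loop described above can be illustrated with a toy sketch. This is not the authors' implementation: the article does not name the training algorithm, so the sketch assumes a simple DAgger-style loop, and every name in it (`ToyEnv`, `expert_action`, `TableAgent`, `train`, `run_episode`) is hypothetical. A rule-based function stands in for the GPT-4 expert, a hand-written string stands in for the PDDL-derived text description, and a lookup table stands in for the vision-language agent.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy world: the agent must take a key, then open a door.
ACTIONS = ["take key", "open door", "wait"]


class ToyEnv:
    """Stand-in environment exposing a 'visual' state and a parallel text state."""

    def reset(self):
        self.has_key = False
        self.door_open = False
        return self.observe()

    def observe(self):
        # Stand-in for an image frame: a tuple of raw features.
        return (int(self.has_key), int(self.door_open))

    def describe(self):
        # Stand-in for the PDDL-derived text description of the same frame.
        return f"has_key={self.has_key}; door_open={self.door_open}"

    def step(self, action):
        if action == "take key":
            self.has_key = True
        elif action == "open door" and self.has_key:
            self.door_open = True
        return self.observe(), self.door_open  # (observation, done)


def expert_action(text_state):
    """Rule-based placeholder for the LLM expert; it only ever reads text."""
    if "has_key=False" in text_state:
        return "take key"
    return "open door"


class TableAgent:
    """Placeholder for the vision-language agent: a lookup-table policy."""

    def __init__(self, rng):
        self.rng = rng
        self.table = {}

    def act(self, obs):
        # Fall back to a random action for observations never seen in training.
        return self.table.get(obs, self.rng.choice(ACTIONS))

    def fit(self, dataset):
        # Imitate the expert: keep the most frequent expert label per observation.
        votes = defaultdict(Counter)
        for obs, action in dataset:
            votes[obs][action] += 1
        self.table = {obs: c.most_common(1)[0][0] for obs, c in votes.items()}


def train(rounds=5, max_steps=10, seed=0):
    rng = random.Random(seed)
    env, agent, dataset = ToyEnv(), TableAgent(rng), []
    for _ in range(rounds):
        obs, done = env.reset(), False
        for _ in range(max_steps):
            # Label the state the agent actually reached with the expert's action.
            dataset.append((obs, expert_action(env.describe())))
            # The agent, not the expert, decides which state is visited next.
            obs, done = env.step(agent.act(obs))
            if done:
                break
        agent.fit(dataset)  # retrain on everything collected so far
    return agent


def run_episode(agent, max_steps=10):
    env = ToyEnv()
    obs = env.reset()
    for _ in range(max_steps):
        obs, done = env.step(agent.act(obs))
        if done:
            return True
    return False
```

The point of the sketch is the division of labor: the agent acts only on the visual observation, while the expert sees only the text description of the same state and supplies the corrective label. Because labels are collected on states the agent itself visits, the training data follows the agent's own state distribution, which is one common way to reduce the distribution shift mentioned above.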

Figure 2. A variety of household scenarios in which the agent is required to complete various household tasks

Their paper, entitled “Embodied Multi-Modal Agent trained by an LLM from a Parallel TextWorld”, has been accepted by the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2024, one of the most influential academic conferences in the field of artificial intelligence, ranking 4th in the Google Scholar Metrics impact ranking, just behind Nature, the New England Journal of Medicine, and Science.

The work was a collaborative effort between SUSTech, the University of Maryland, College Park (UMD), the University of Technology Sydney (UTS), and JD Explore Academy.

The first author of the paper is Yijun Yang, a Ph.D. candidate in the Department of Computer Science and Engineering at SUSTech, with Chair Professor Yuhui Shi as the corresponding author. SUSTech is the first corresponding institution of the paper.

Chair Professor Yuhui Shi joined the Department of Computer Science and Engineering at SUSTech in September 2016. He has made numerous contributions to swarm intelligence, computational intelligence, and evolutionary computation. In 1998, he and Professor Eberhart proposed an improved Particle Swarm Optimization algorithm, which has since been widely adopted by Particle Swarm Optimization researchers. He is also the founder of the Brain Storm Optimization algorithm. To date, he has published over 200 papers in top international academic journals and conferences, with more than 58,000 total citations on Google Scholar and an H-index of 49.

Paper link: https://arxiv.org/abs/2311.16714


Proofread By Adrian Cremin, Yingying XIA

Photo By Department of Computer Science and Engineering