Understanding how people move across cities is a vital challenge for urban planners and policymakers worldwide. Intelligent transportation systems have been implemented to help plan transportation networks for population growth and other demographic changes. However, these systems still face numerous issues.

Recently, Assistant Professor James J.Q. Yu (Department of Computer Science and Engineering) and his research group made a series of advances in the field of intelligent transportation systems (ITS). Their papers have been published in high-impact academic journals and world-class conferences, including the IEEE Internet of Things Journal (IF = 9.936), IEEE Transactions on Intelligent Transportation Systems (IF = 6.319), and Transportation Research Part C: Emerging Technologies (IF = 6.077).

One of the primary challenges in ITS research is predicting traffic states. Dr. Yu's team examined this issue in the paper “Privacy-Preserving Traffic Flow Prediction: A Federated Learning Approach,” published in the IEEE Internet of Things Journal.

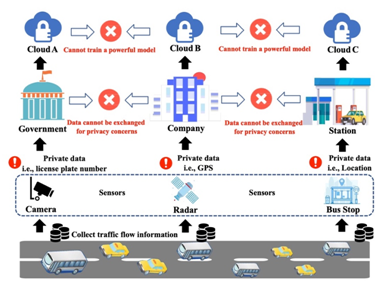

Existing deep learning approaches to traffic forecasting have achieved excellent results by training on large volumes of data. However, the datasets used in these studies have generally not been cleansed of user data, creating significant data privacy risks. As a result, datasets remain isolated from one another, because different organizations are unable to share data due to privacy concerns.

Figure 1 Privacy and security problems in traffic flow prediction.

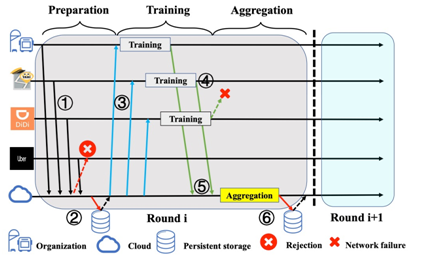

The research group addressed this issue by proposing FedGRU, a Federated Learning-based Gated Recurrent Unit neural network algorithm for traffic flow prediction under privacy-preservation constraints.

Unlike current centralized learning methods, this algorithm updates a global learning model through a secure parameter aggregation mechanism instead of sharing raw data directly among organizations. It adopts the Federated Averaging algorithm to reduce communication overhead during model parameter transmission, and it incorporates a Joint Announcement Protocol to improve the scalability of the prediction model. Compared to conventional methods, the algorithm produces accurate traffic predictions without compromising data privacy.
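The article describes FedGRU only at a high level. As a rough illustration of the aggregation idea, the sketch below shows just the server-side weighted-averaging step of the standard Federated Averaging (FedAvg) algorithm, assuming each organization's model is flattened into a list of floats and weighted by its local sample count. The function name `federated_average` and the example numbers are hypothetical; GRU training, secure aggregation, and the Joint Announcement Protocol are not shown.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Weighted average of client parameter vectors (FedAvg-style aggregation).

    Each client sends only its locally trained parameters, weighted by the
    number of local training samples it holds; raw data never leaves a client.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n_k in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        share = n_k / total  # this client's contribution to the global model
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


# Hypothetical example: three organizations, each with a 2-parameter model.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 300, 600]
print([round(w, 6) for w in federated_average(clients, sizes)])  # prints [4.0, 5.0]
```

Only the averaged parameters travel between server and clients, which is why this step keeps raw traffic records local.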

Figure 2 Federated learning-based traffic flow prediction architecture.

Figure 3 Federated learning joint-announcement protocol.

The first author of the paper is Yi Liu, a visiting student at SUSTech. The corresponding author is Dr. Yu, and Nanyang Technological University also contributed to the work. SUSTech is the first corresponding institution.

Another aspect of traffic management is estimating traffic speed in real time, which underpins transportation control and transport management applications. Dr. Yu's paper on this topic, “Real-Time Traffic Speed Estimation With Graph Convolutional Generative Autoencoder,” was published in IEEE Transactions on Intelligent Transportation Systems.

Existing solutions rely on stationary speed sensors and GPS records. However, these approaches require vast amounts of data that are not available to all providers, and they put user privacy at risk. Given differences in population density, rural roads are also likely to be covered less adequately than urban roads.

The research group proposed a novel deep learning model called the Graph Convolutional Generative Autoencoder (GCGA), which integrates recent developments in deep learning to extract the spatial correlations of the transportation network. The model takes incomplete historical data and real-time GPS records as inputs to estimate traffic speed over a large region.

The model adopts the design principles of graph convolutional networks (GCN) and generative adversarial networks (GAN) to extract the graph-related spatial characteristics of transportation networks. Because the input graph data are incomplete, the team also proposed a practical training methodology for GCGA to fine-tune the network parameters.
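The article does not give GCGA's equations. To illustrate what extracting graph-related spatial characteristics can look like, the sketch below implements one layer of the widely used GCN propagation rule of Kipf and Welling, ReLU(D^-1/2 (A + I) D^-1/2 H W), on a toy road graph. The road network, speeds, and weights are hypothetical, and GCGA's actual layers, generative autoencoder, and adversarial training are not reproduced here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """Return D^-1/2 (A + I) D^-1/2, the renormalized adjacency used by GCNs."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # degree including the self-loop
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One graph convolution: each node mixes its own and its neighbours' features."""
    propagated = matmul(matmul(normalized_adjacency(adj), features), weights)
    return [[max(0.0, x) for x in row] for row in propagated]  # ReLU


# Hypothetical 3-segment road network: segment 1 connects to segments 0 and 2.
road_adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
speeds = [[30.0], [50.0], [40.0]]  # one feature (speed, km/h) per segment
identity_w = [[1.0]]               # hypothetical 1x1 weight matrix
print(gcn_layer(road_adj, speeds, identity_w))
```

Stacking such layers lets information from farther road segments reach each node, which is the kind of spatial correlation the paragraph above refers to.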

Compared to traditional methods, the proposed model extracts the spatial features of transportation networks to generate traffic speed maps. It reduces the dependence on stationary speed sensors and makes full use of dynamic, independent, and incomplete vehicular GPS records.
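The article does not say how GCGA's training handles missing readings. One common way to learn from incomplete observations, assumed here for illustration and not confirmed as the paper's method, is to compute the error only on road segments that actually have GPS data. The function `masked_mse` and the sample speeds are hypothetical.

```python
from typing import Optional, Sequence


def masked_mse(predicted: Sequence[float],
               observed: Sequence[Optional[float]]) -> float:
    """Mean squared error over road segments that actually have a GPS reading.

    Segments with no reading (None) are skipped, so missing data neither
    rewards nor penalizes the model's estimate for that segment.
    """
    pairs = [(p, o) for p, o in zip(predicted, observed) if o is not None]
    if not pairs:
        raise ValueError("no observed segments to compare against")
    return sum((p - o) ** 2 for p, o in pairs) / len(pairs)


# Hypothetical snapshot: only segments 0 and 2 were traversed by probe vehicles.
estimate = [42.0, 55.0, 38.0, 60.0]
gps_speeds = [40.0, None, 41.0, None]
print(masked_mse(estimate, gps_speeds))  # prints 6.5
```

The model still produces estimates for unobserved segments (55.0 and 60.0 here); they simply do not contribute to the loss for this snapshot.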

Simulation results demonstrate that the proposed model notably outperforms existing traffic speed estimation methods and deep learning techniques. The methodology also holds promise for solving other research and industrial problems.

Figure 4 GCGA model architecture.

Dr. Yu is both the first author and the corresponding author. The co-author is Dr. Jiatao Gu, a research scientist at Facebook AI Research; the Department of Electrical and Electronic Engineering of the University of Hong Kong (HKU) also contributed to the work.

Understanding how people move between different forms of transport is vital information for urban planners and policymakers. Dr. Yu's paper “Travel Mode Identification with GPS Trajectories using Wavelet Transform and Deep Learning” was published in IEEE Transactions on Intelligent Transportation Systems.

Current studies identify individual travel modes from GPS data. However, they suffer from a range of problems, such as limited features, high data dimensionality, and under-utilization of the available data.

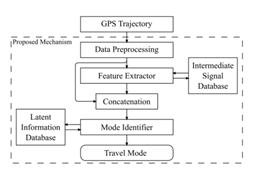

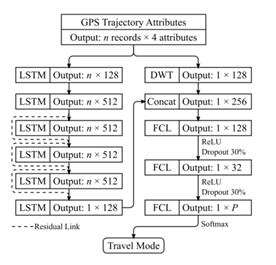

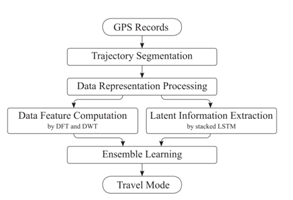

The research group proposed a travel mode identification mechanism based on the discrete wavelet transform (DWT) and recent deep neural network (DNN) techniques to obtain accurate results. It is a pioneering study in applying the wavelet transform and recurrent neural networks to travel mode identification.

Owing to its outstanding frequency-domain feature extraction capability, DWT provides extra features that help the DNN distinguish between travel modes. The proposed mechanism exploits temporal correlations in GPS trajectories to train an intelligent identification system without requiring fixed trajectory lengths. Because it employs DWT and DNN, the whole identification process can run in real time. The results indicate that the mechanism outperforms existing travel mode identification methods on the same data set while requiring little computation time.
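The article does not specify which wavelet or features the mechanism uses. As a minimal illustration, assuming a single-level Haar wavelet (the simplest DWT) applied to a GPS-derived speed series, the sketch below splits the series into low-frequency approximation and high-frequency detail coefficients and summarizes each band by its energy. The helper `wavelet_features` and the sample data are hypothetical; odd-length series are padded here so that trajectories need not share a fixed length.

```python
import math
from typing import List, Sequence, Tuple


def haar_dwt(signal: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Single-level Haar discrete wavelet transform.

    Returns (approximation, detail) coefficients. Odd-length input is padded
    by repeating the last sample, so any trajectory length is accepted.
    """
    x = list(signal)
    if not x:
        raise ValueError("empty signal")
    if len(x) % 2:
        x.append(x[-1])
    s = 1 / math.sqrt(2)  # orthonormal Haar scaling factor
    approx = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return approx, detail


def wavelet_features(speeds: Sequence[float]) -> List[float]:
    """Hypothetical feature vector: energy of the low- and high-frequency bands."""
    approx, detail = haar_dwt(speeds)
    return [sum(c * c for c in approx), sum(c * c for c in detail)]


# Hypothetical GPS-derived speed sequences (m/s).
walk = [1.4, 1.3, 1.5, 1.4, 1.3, 1.5]
bus = [0.0, 8.0, 12.0, 0.0, 10.0, 2.0]  # stop-and-go pattern
print(wavelet_features(walk))
print(wavelet_features(bus))  # far more energy in the detail (second) band
```

Features like these could be concatenated with the raw sequence before it is fed to a recurrent network; how the paper combines them is not described in the article.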

Figure 5 Data flow of the proposed travel mode identification mechanism.

Figure 6 Framework of the proposed travel mode identifier.

The sole author of this paper is Dr. Yu.

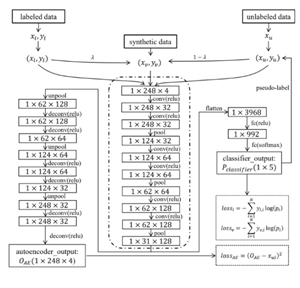

Many travel mode identification approaches in intelligent transportation have relied on manually annotating vehicular trajectories with relevant details. However, manual annotation is costly and error-prone. The research group addressed this problem in the paper “Semi-supervised Deep Ensemble Learning for Travel Mode Identification,” published in the high-impact academic journal Transportation Research Part C: Emerging Technologies.

The paper proposes a novel semi-supervised, deep ensemble learning-based travel mode identification approach. The identifier produces proxy labels for unlabeled data, which can then serve as training targets alongside the original annotated data. Building on the existing but scarce travel mode labels in the data set, it constructs an ensemble of four neural networks to generate these proxy labels. The networks collaborate to determine the credibility of each proxy label, and the reliable labels are included in subsequent training for data augmentation.
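The article does not say how the four networks judge a proxy label's credibility. The sketch below assumes a simple agreement rule: a label is accepted only when at least `min_agreement` ensemble members predict it (unanimity by default). The function name, threshold, and votes are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def proxy_labels(votes_per_sample: Sequence[Sequence[str]],
                 min_agreement: int = 4) -> Dict[int, str]:
    """Keep a proxy label only when enough ensemble members agree on it.

    votes_per_sample[i] holds the label each network predicted for unlabeled
    sample i. Samples without sufficient agreement stay unlabeled.
    """
    accepted: Dict[int, str] = {}
    for idx, votes in enumerate(votes_per_sample):
        if not votes:
            continue
        label, count = Counter(votes).most_common(1)[0]
        if count >= min_agreement:
            accepted[idx] = label
    return accepted


# Hypothetical predictions from a four-network ensemble on three unlabeled trajectories.
votes = [
    ["walk", "walk", "walk", "walk"],  # unanimous
    ["bus", "car", "bus", "bus"],      # 3 of 4 agree
    ["bike", "walk", "car", "bus"],    # no consensus
]
print(proxy_labels(votes))                   # prints {0: 'walk'}
print(proxy_labels(votes, min_agreement=3))  # prints {0: 'walk', 1: 'bus'}
```

The accepted pairs would then be appended to the labeled set for the next training round; a stricter threshold trades fewer proxy labels for higher confidence in each one.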

Figure 7 Data processing flow of the proposed identifier.

The team conducted a series of case studies on the GeoLife GPS Trajectory Dataset to demonstrate the effectiveness of the approach. Compared with state-of-the-art travel mode identification approaches, the proposed approach outperformed all of them in semi-supervised learning tests under every data set configuration.

The sole author of this paper is Dr. Yu.

The research group has submitted a paper titled “MultiMix: A Multi-Task Deep Learning Approach for Travel Mode Identification with Few GPS Data” to the upcoming 23rd IEEE International Conference on Intelligent Transportation Systems (IEEE ITSC 2020). The paper proposes a travel mode identification model that combines semi-supervised and unsupervised learning.

MultiMix is a semi-supervised multi-task learning framework for travel mode identification. The framework trains a deep autoencoder on both labeled and unlabeled data by optimizing three corresponding objective functions. The results show that this mixed-data, multi-task learning approach achieves better identification performance than previous approaches.

The first author of the paper is postgraduate student Xiaozhuang Song. The corresponding author is Dr. Yu, and the co-author is UTS-SUSTech joint doctoral candidate Christos Markos.

The other paper to be presented at IEEE ITSC 2020 is entitled “Unsupervised Deep Learning for GPS-based Transportation Mode Identification.” It is the first work to leverage unsupervised deep learning for clustering GPS trajectory data by transportation mode.

The paper proposes pre-training a deep convolutional autoencoder (CAE) on fixed-size trajectory segments. A clustering layer, which maintains the cluster centroids as trainable weights, is then attached to the CAE's embedding layer. The resulting composite clustering model is retrained so that the encoder learns a clustering-friendly representation of the input data, striking a balance between the model's reconstruction and clustering losses. Experiments show that this approach outperforms traditional clustering algorithms and semi-supervised techniques in transportation mode identification, achieving competitive identification accuracy without using any labels.
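The article describes the clustering layer only briefly. A well-known design that stores centroids as trainable weights is Deep Embedded Clustering (DEC), whose IDEC variant adds the autoencoder's reconstruction loss back so that both are balanced. The sketch below assumes that formulation: Student's t soft assignments, a sharpened target distribution, a KL-divergence clustering loss, and a weighted sum with the reconstruction loss. It computes these quantities in plain Python for one tiny batch and omits the CAE and gradient updates; the function names, embeddings, and `gamma=0.1` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def soft_assign(z: Vector, centroids: Sequence[Vector], alpha: float = 1.0) -> List[float]:
    """Student's t similarity q_j between one embedding z and each centroid mu_j."""
    sims = []
    for mu in centroids:
        dist2 = sum((zi - mi) ** 2 for zi, mi in zip(z, mu))
        sims.append((1.0 + dist2 / alpha) ** (-(alpha + 1.0) / 2.0))
    total = sum(sims)
    return [s / total for s in sims]


def target_distribution(q: Sequence[Vector]) -> List[List[float]]:
    """Sharpened targets p_ij proportional to q_ij^2 / f_j, where f_j = sum_i q_ij."""
    k = len(q[0])
    freq = [sum(row[j] for row in q) for j in range(k)]
    targets = []
    for row in q:
        w = [row[j] ** 2 / freq[j] for j in range(k)]
        norm = sum(w)
        targets.append([x / norm for x in w])
    return targets


def clustering_loss(p: Sequence[Vector], q: Sequence[Vector]) -> float:
    """KL(P || Q) summed over all samples and clusters."""
    return sum(pij * math.log(pij / qij)
               for prow, qrow in zip(p, q)
               for pij, qij in zip(prow, qrow) if pij > 0)


def composite_loss(reconstruction: float, clustering: float, gamma: float = 0.1) -> float:
    """Balance reconstruction and clustering objectives (IDEC-style weighting)."""
    return reconstruction + gamma * clustering


# Hypothetical 2-D embeddings of three trajectory segments and two centroids.
embeddings = [[0.0, 0.0], [0.5, 0.2], [4.8, 5.1]]
centroids = [[0.0, 0.0], [5.0, 5.0]]
q = [soft_assign(z, centroids) for z in embeddings]
p = target_distribution(q)
print(composite_loss(reconstruction=0.8, clustering=clustering_loss(p, q)))
```

Minimizing the KL term pulls embeddings toward confidently assigned centroids, while the reconstruction term keeps the encoder from discarding information; `gamma` sets the balance between the two.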

The first author of the paper is UTS-SUSTech joint doctoral candidate Christos Markos, and the corresponding author is Dr. James Yu.

The above works have been funded by projects and institutions, including the National Natural Science Foundation of China, the Guangdong Basic and Applied Basic Research Foundation, and the Guangdong Provincial Key Laboratory of Brain-inspired Intelligent Computation.

Proofread by Yingying XIA

Photo by Department of Computer Science and Engineering, Yan QIU