In ancient Chinese mythology, “Di Ting” (Truth Listener) lies beneath the seat of Ksitigarbha Bodhisattva, pressing its ear to the ground to instantly perceive the slightest tremors within the Three Realms and Six Paths. We liken Distributed Acoustic Sensing (DAS) technology to a modern “Digital Di Ting” that uses thousands of kilometers of optical cable to give the earth a sense of hearing. If the optical fiber network supplies the “nerve endings” of perception, then Artificial Intelligence (AI), and specifically the AI Agent concept, implants a “brain” into this nervous system. Unlike traditional static deep learning models, an AI Agent is an intelligent entity that closes the complete loop of Perception, Cognition, Decision, and Action. Its introduction marks the transition of fiber optic sensing from “passive monitoring” to a new era of “active cognitive” intelligence.

In the intelligent era of the Internet of Everything, DAS plays a crucial role in connecting the physical world with digital space. Based on the principle of Phase-Sensitive Optical Time-Domain Reflectometry (Φ-OTDR), DAS can turn existing communication cables into thousands of continuously distributed “auditory nerves” that capture vibration signals around the clock along the entire length of the fiber.
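How Φ-OTDR turns one cable into thousands of sensing channels comes down to time-of-flight arithmetic. A probe pulse is launched into the fiber, and Rayleigh backscatter arriving a time t later originates from position z = ct/(2n), where n is the group index of the fiber. The minimal Python sketch below illustrates this under one stated assumption: a group index of 1.468, a typical value for standard single-mode fiber near 1550 nm. The function names and example parameters are hypothetical and are not taken from the paper.

```python
"""Illustrative Φ-OTDR range arithmetic (hypothetical helper names)."""

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s
GROUP_INDEX = 1.468       # ASSUMPTION: typical group index of single-mode fiber near 1550 nm


def position_from_delay(delay_s: float, n: float = GROUP_INDEX) -> float:
    """Fiber position (m) of backscatter arriving delay_s seconds after pulse launch.

    Light travels to the scattering point and back, hence the factor of 2.
    """
    return C_VACUUM * delay_s / (2.0 * n)


def sample_spacing(adc_rate_hz: float, n: float = GROUP_INDEX) -> float:
    """Distance along the fiber (m) between consecutive ADC samples of one trace."""
    return C_VACUUM / (2.0 * n * adc_rate_hz)


def max_pulse_rate(fiber_length_m: float, n: float = GROUP_INDEX) -> float:
    """Highest pulse repetition rate (Hz) that keeps successive traces from overlapping.

    A new pulse may only be launched once backscatter from the far end has returned,
    so this is also the per-channel sampling rate of the vibration signal.
    """
    return C_VACUUM / (2.0 * n * fiber_length_m)


if __name__ == "__main__":
    print(f"event 100 us after launch: {position_from_delay(100e-6):.1f} m")
    print(f"spacing at 100 MS/s ADC:   {sample_spacing(100e6):.3f} m")
    print(f"max pulse rate, 50 km:     {max_pulse_rate(50_000):.0f} Hz")
```

Because the pulse rate also sets the per-channel sampling rate, cable length trades directly against vibration bandwidth: for the 50 km example the rate is about 2 kHz, which by the Nyquist criterion limits detectable vibrations to roughly 1 kHz.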

However, traditional DAS systems have long been stuck in a technological bottleneck of “hearing but not understanding.” Faced with massive data volumes (terabytes per day) mixed with environmental noise and multi-source interference, traditional approaches that rely on manual interpretation or simple thresholds fall short, making precise identification and risk prediction for complex events difficult. To break through this predicament, introducing AI Agents with self-learning and adaptive capabilities has become an inevitable trend. The deeply fused architecture of “Front-end High-Fidelity Sensing + Back-end AI Agent Intelligent Decision-Making” closes the loop from “Perception” to “Analysis” to “Decision,” moving DAS from passive data monitoring to a new generation of intelligent sensing systems with active cognitive capabilities.

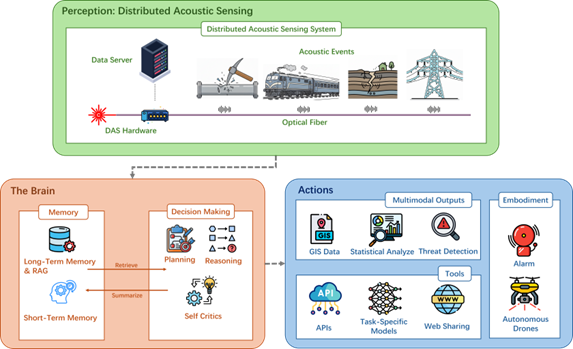

This paper proposes a collaborative architecture integrating a front-end DAS sensing unit and a back-end AI Agent decision-making hub, systematically constructing a closed-loop system of “Fiber Optic Sensing—Intelligent Decision-Making—Multi-Scenario Response.”

Schematic Diagram of the Fusion System Architecture of Distributed Acoustic Sensing and AI Agent

Addressing the urgent demand for high-precision, real-time monitoring in intelligent infrastructure, this paper proposes a collaborative architecture integrating DAS and AI Agents, aiming to break the bottleneck of “passive perception without cognition” in traditional fiber optic sensing. The article systematically reviews recent progress in DAS front-end signal processing, including high-fidelity phase demodulation, efficient data compression, and multi-dimensional noise suppression, and examines how back-end AI Agents use deep learning and edge computing to interpret massive data streams in real time. It highlights applications in four major scenarios: power system disaster prevention, rail transit monitoring, oil and gas pipeline security, and seismic observation. By constructing a “Perception-Analysis-Decision” closed loop, the architecture addresses core problems such as weak signal recognition, cross-scenario generalization, and real-time early warning, and the paper outlines a systematic technical path and future outlook for the next generation of adaptive, intelligent fiber optic sensing networks.
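Phase demodulation is the first of the front-end steps listed above. One common approach, sketched here as an illustration rather than as the paper's method, takes coherent I/Q samples of the backscatter at each fiber position, recovers the wrapped phase with `arctan2`, unwraps it along time, and differences it across a gauge length, yielding a quantity proportional to the strain on that fiber segment. The gauge length, the `synthetic_iq` test generator, and all parameter values are hypothetical.

```python
import numpy as np


def unwrapped_phase(i_data: np.ndarray, q_data: np.ndarray) -> np.ndarray:
    """Optical phase from I/Q samples of shape (n_times, n_positions), unwrapped along time."""
    wrapped = np.arctan2(q_data, i_data)   # wrapped phase in (-pi, pi]
    return np.unwrap(wrapped, axis=0)      # remove 2*pi jumps sample by sample in time


def differential_phase(i_data: np.ndarray, q_data: np.ndarray, gauge_samples: int) -> np.ndarray:
    """Phase difference across a gauge length for each fiber position.

    Returns shape (n_times, n_positions - gauge_samples). Each channel's static (DC)
    offset is removed, leaving only the time-varying, vibration-induced part.
    """
    phase = unwrapped_phase(i_data, q_data)
    diff = phase[:, gauge_samples:] - phase[:, :-gauge_samples]
    return diff - diff.mean(axis=0, keepdims=True)


def synthetic_iq(n_times=2000, n_pos=8, fs=10_000.0, f_vib=50.0, amp=5.0, seed=0):
    """Hypothetical test data: positions >= n_pos // 2 carry a sinusoidal phase of `amp` rad."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_times) / fs
    static = rng.uniform(-np.pi, np.pi, n_pos)  # random Rayleigh phase per position
    fading = rng.uniform(0.5, 1.5, n_pos)       # random backscatter amplitude per position
    dyn = np.zeros((n_times, n_pos))
    dyn[:, n_pos // 2:] = amp * np.sin(2 * np.pi * f_vib * t)[:, None]
    total = static + dyn
    return fading * np.cos(total), fading * np.sin(total), t


if __name__ == "__main__":
    i_data, q_data, t = synthetic_iq()
    dphi = differential_phase(i_data, q_data, gauge_samples=2)
    # Peak differential phase per channel: nonzero only where the gauge straddles the event edge.
    print(np.round(np.abs(dphi).max(axis=0), 3))
```

In this synthetic case only the two channels whose gauge straddles the boundary of the vibrating section respond, which is how a differential-phase DAS localizes an event along the cable. The 5 rad amplitude deliberately exceeds π, so the unwrapping step is essential. Subtracting the per-channel mean is a simple stand-in for the high-pass filtering a real system would apply.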

Professor Liyang SHAO, the Deputy Dean of the College of Innovation and Entrepreneurship and a researcher in the Department of Electronic and Electrical Engineering at SUSTech, is the first author and the first corresponding author of this paper.

Paper Link: https://www.oejournal.org/oep/article/doi/10.29026/oep.2025.250012

DOI: 10.29026/oep.2025.250012

Proofread by Noah Crockett, Junxi KE

Photo by Yan QIU